Navigating the modern AI stack

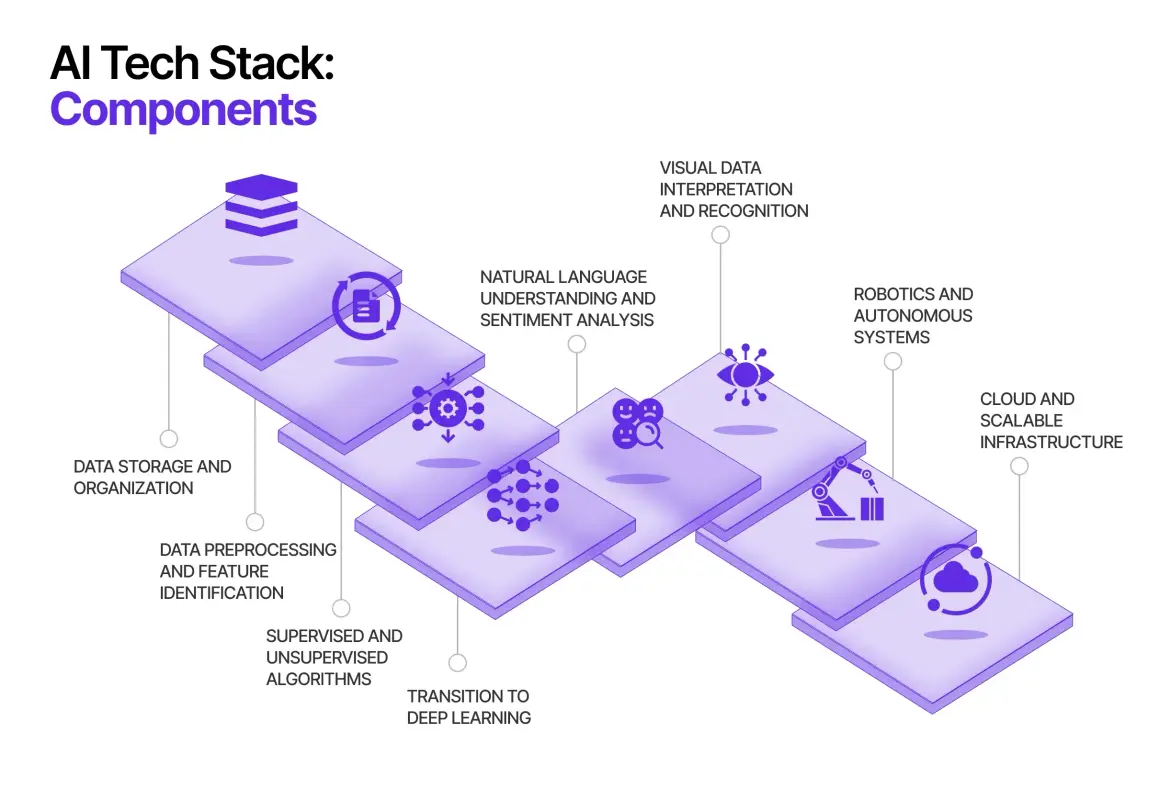

The architecture of AI systems has evolved significantly, giving rise to what is often called the modern AI stack: an ecosystem of technologies and practices for developing, deploying, and managing AI applications. This article walks through that stack layer by layer, covering its key components, the principles behind them, and representative tools.

Data Management Layer: At the foundation of the modern AI stack lies the data management layer, which covers acquiring, storing, processing, and curating data. Data lakes, typically built on storage such as the Hadoop Distributed File System (HDFS) or Amazon S3, provide scalable storage for raw data of many types. Data warehouses, exemplified by Google BigQuery and Snowflake, offer structured storage and analytics capabilities. Streaming systems handle continuous data: Apache Kafka transports event streams and Apache Flink processes them, enabling timely insights and decision-making. Data governance frameworks help enforce regulatory requirements and organizational policies, safeguarding data integrity and privacy.
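
To make the streaming idea concrete, here is a minimal sketch of a tumbling-window aggregation in plain Python, the kind of operation a stream processor runs at much larger scale. It does not use the Kafka or Flink APIs; the event tuples and the function name `tumbling_window_counts` are assumptions made for illustration.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) tuples.
    Returns {window_start: {key: count}}.
    """
    windows = defaultdict(lambda: defaultdict(int))
    for timestamp, key in events:
        # Align each event to the start of the window that contains it.
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    # Convert nested defaultdicts to plain dicts for a stable return type.
    return {start: dict(counts) for start, counts in sorted(windows.items())}


# Hypothetical click events: (seconds since start, page).
events = [(1, "home"), (4, "cart"), (9, "home"), (12, "home"), (19, "cart")]
counts = tumbling_window_counts(events, window_seconds=10)
```

With these sample events, the first ten-second window holds two `home` events and one `cart` event, and the second holds one of each. A production system would also have to deal with late and out-of-order events, which this sketch ignores.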

Model Development Layer: In the model development layer, prepared data is turned into machine learning models. The work includes feature engineering, model selection, hyperparameter tuning, and evaluation. Popular machine learning frameworks, including TensorFlow, PyTorch, and scikit-learn, provide tools and algorithms for these tasks. AutoML platforms such as Google Cloud AutoML and H2O.ai automate parts of the model development pipeline, lowering the barrier to entry and shortening time to deployment. More specialized libraries, such as Keras as a high-level deep learning API and XGBoost for gradient boosting, cover specific use cases.
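
As a rough illustration of hyperparameter tuning and evaluation, the sketch below runs a small grid search with k-fold cross-validation over the `k` of a k-nearest-neighbors classifier. It is written in plain Python rather than with any of the frameworks named above; the synthetic data and the helper names `knn_predict` and `cross_val_accuracy` are assumptions for the example.

```python
import random
from collections import Counter


def knn_predict(train, point, k):
    """Predict the majority label among the k nearest training points."""
    nearest = sorted(
        train,
        key=lambda row: sum((a - b) ** 2 for a, b in zip(row[0], point)),
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cross_val_accuracy(data, k, folds=5):
    """Mean accuracy of k-NN over `folds` cross-validation splits."""
    scores = []
    for i in range(folds):
        # Every folds-th row (offset i) is held out; the rest is training data.
        test = data[i::folds]
        train = [row for j, row in enumerate(data) if j % folds != i]
        correct = sum(knn_predict(train, x, k) == y for x, y in test)
        scores.append(correct / len(test))
    return sum(scores) / folds


# Synthetic two-class data: Gaussian clusters around (0, 0) and (3, 3).
rng = random.Random(0)
data = [
    ((rng.gauss(c * 3, 1), rng.gauss(c * 3, 1)), c)
    for c in (0, 1)
    for _ in range(50)
]
rng.shuffle(data)

# Grid search: evaluate each candidate k and keep the best-scoring one.
results = {k: cross_val_accuracy(data, k) for k in (1, 3, 5, 7)}
best_k = max(results, key=results.get)
```

Libraries such as scikit-learn package the same loop behind a higher-level interface; the point here is only to show what tuning and evaluation involve.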

Model Deployment Layer: Once trained, machine learning models must be integrated into production environments, where they serve predictions, often in real time. The model deployment layer covers the technologies used to package, deploy, and scale models. Container tooling provides lightweight, portable environments: Docker packages and runs models as container images, and Kubernetes orchestrates and scales those containers across infrastructure. Serverless platforms, such as AWS Lambda and Azure Functions, abstract away the underlying infrastructure, which can make model deployment cost-effective and scalable. Model serving tools such as TensorFlow Serving, along with the model serving features of MLflow, manage models in production with an emphasis on reliability, scalability, and low latency.
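
Whatever the platform, the core of a prediction service is a function that validates a request, runs the model, and returns a response. The sketch below shows that core in plain Python. The hard-coded logistic-regression weights, the JSON request shape, and the name `handle_request` are all assumptions for illustration, not the interface of any serving framework mentioned above.

```python
import json
import math

# Hypothetical trained logistic-regression parameters, hard-coded for illustration.
WEIGHTS = [0.8, -0.4]
BIAS = 0.1


def predict(features):
    """Return the positive-class probability for one feature vector."""
    score = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-score))


def handle_request(body):
    """Validate a JSON request body and return (status_code, response_json)."""
    try:
        features = json.loads(body)["features"]
        if len(features) != len(WEIGHTS):
            raise ValueError("expected %d features" % len(WEIGHTS))
        probability = predict([float(x) for x in features])
    except (KeyError, TypeError, ValueError) as exc:
        # Reject malformed input instead of crashing the serving process.
        return 400, json.dumps({"error": str(exc)})
    return 200, json.dumps({"probability": probability})


status, response = handle_request('{"features": [1.0, 2.0]}')
```

A function like this could be wrapped by an HTTP server inside a container or called from a serverless entry point; keeping it free of framework code makes it easy to test in isolation.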

Monitoring and Management Layer: Effective monitoring and management are essential to the performance, reliability, and security of AI systems in production. This layer covers the tools and practices used to track model performance, detect anomalies, and manage infrastructure resources. Time-series stores such as InfluxDB and Prometheus collect and query metrics on model inference, resource utilization, and system health. Visualization tools such as Grafana and Kibana provide interactive dashboards for monitoring key performance indicators (KPIs) and spotting bottlenecks. Anomaly detection techniques, ranging from statistical methods to machine learning algorithms, help identify unusual behavior early so it can be mitigated. Continuous integration and continuous deployment (CI/CD) pipelines automate the testing and deployment of model updates, making releases faster and more reliable.
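
One of the simplest statistical methods for anomaly detection is a rolling z-score: flag a metric value that lies more than a few standard deviations from the mean of recent values. The sketch below applies it to a list of inference latencies. The window size, the threshold, and the sample data are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(values, window=20, threshold=3.0):
    """Return indices whose value deviates more than `threshold` standard
    deviations from the mean of the preceding `window` values."""
    history = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(values):
        # Only score a value once a full window of history is available.
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append(index)
        history.append(value)
    return anomalies


# Hypothetical inference latencies in milliseconds, with one spike.
latencies = [50 + (i % 5) for i in range(40)]
latencies[30] = 120
spikes = detect_anomalies(latencies)
```

Here only the spike at index 30 is flagged. Note that the spike then enters the rolling window and inflates the standard deviation for a while, so more robust variants exclude flagged points or use the median instead of the mean.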

Ethical and Regulatory Considerations: Beyond technical considerations, the modern AI stack must address the ethical and regulatory challenges that come with AI applications. This means ensuring fairness, transparency, and accountability in algorithmic decision-making. Fairness-aware machine learning techniques help here: disparate impact analysis measures bias in model predictions, and fairness constraints reduce it during training. Explainable AI (XAI) methods, including feature importance analysis and model interpretation techniques, make AI systems easier to interpret and to trust. Regulations such as GDPR and HIPAA impose stringent requirements for data protection, privacy, and consent, so AI architectures need robust governance and compliance mechanisms. Privacy-preserving machine learning techniques address part of this need: federated learning enables collaborative model training without centralizing raw data, and differential privacy limits what a model or statistic can reveal about any individual.
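
Two of the techniques above are small enough to sketch directly. The first function computes a disparate impact ratio, the positive-outcome rate of one group divided by that of a reference group. The second releases a count with Laplace noise, the basic mechanism behind differential privacy for counting queries. The loan data, group labels, and the `epsilon` value are hypothetical, and the function names are assumptions for this example.

```python
import random


def disparate_impact(outcomes, groups, protected, reference):
    """Ratio of positive-outcome rates: protected group over reference group."""
    def positive_rate(group):
        selected = [o for o, g in zip(outcomes, groups) if g == group]
        return sum(selected) / len(selected)
    return positive_rate(protected) / positive_rate(reference)


def private_count(true_count, epsilon, rng):
    """Release a count with Laplace noise of scale 1/epsilon (sensitivity 1)."""
    # The difference of two exponential draws with rate epsilon
    # follows a Laplace distribution with mean 0 and scale 1/epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Hypothetical loan decisions (1 = approved) for two groups.
outcomes = [1, 0, 0, 1, 1, 1, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
ratio = disparate_impact(outcomes, groups, protected="a", reference="b")

noisy_approvals = private_count(sum(outcomes), epsilon=0.5, rng=random.Random(42))
```

In this sample, group `a` is approved at a rate of 0.5 and group `b` at 0.75, giving a ratio of about 0.67. A commonly cited rule of thumb treats ratios below 0.8 as a signal worth investigating. For the noisy count, a smaller `epsilon` means more noise and stronger privacy.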

The modern AI stack combines technologies, methodologies, and best practices for building robust, scalable, and ethical AI applications. Understanding its key components and the principles behind them helps organizations navigate the complexity of AI architecture more effectively. A holistic approach to data management, model development, deployment, monitoring, and ethics is essential for building trustworthy and resilient AI systems that create value across domains and industries.